#MSDYN365BC: Building a Development Environment for Microsoft Dynamics GP ISVs - Installing Visual Studio Code

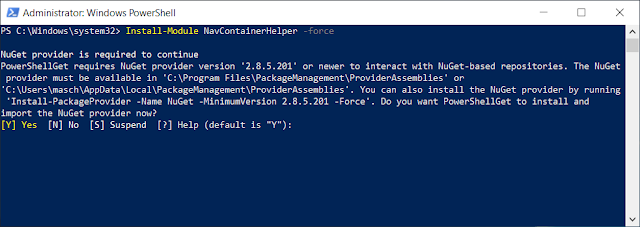

In the previous three articles of the series, I talked about the rationale for selecting a container-based environment for development purposes. We also installed Docker, then downloaded and installed the Microsoft Dynamics 365 Business Central containers for Docker. This set us on a path to installing the development IDE and selecting a source code control provider to host our AL solutions. See:

- #MSDYN365BC: Building a Development Environment for Microsoft Dynamics GP ISVs Part 1/3
- #MSDYN365BC: Building a Development Environment for Microsoft Dynamics GP ISVs Part 2/3
- #MSDYN365BC: Building a Development Environment for Microsoft Dynamics GP ISVs Part 3/3

This article, in particular, will focus on the installation of Visual Studio Code (VS Code) and the AL language extensions for the environment.

## Installing VS Code

VS Code is to BC developers what Dexterity is to Dynamics GP developers. VS Code provides the IDE required to incorporate the AL language extensions to develo...